
In the vast realm of computing, where complex algorithms orchestrate actions and data flows seamlessly, it all boils down to the tiniest units of information: bits and bytes. These minuscule entities, often overlooked, are the foundation upon which the digital world is built.

Bits and Bytes Defined

At the heart of all digital systems lies the concept of binary code. This binary code is made up of two fundamental symbols: 0 and 1.

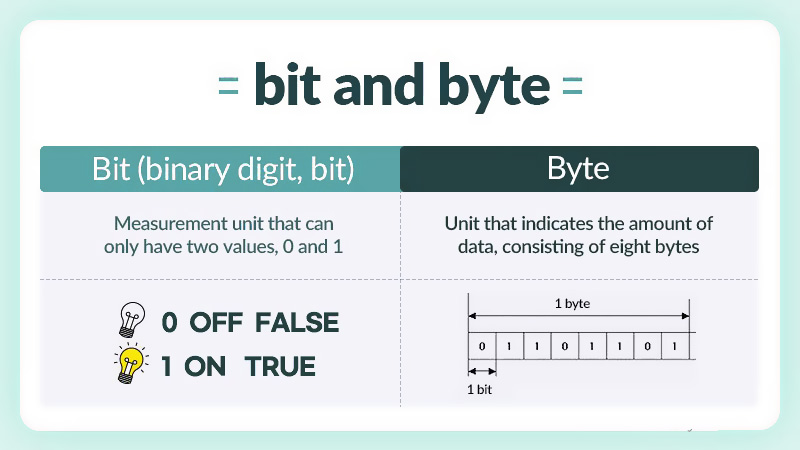

These symbols are used to represent the smallest unit of data – the bit. A bit, short for binary digit, is the basic unit of information in computing. It can hold one of two values, 0 or 1, embodying the essence of digital communication: presence or absence, true or false, on or off.

FYI: If you want to calculate the size of a text or string in bits, bytes, KB, or MB, you can use the Text Size Calculator tool.

Bit

- A "bit" is atomic: the smallest unit of storage

- A bit stores just a 0 or 1

- "In the computer it's all 0's and 1's" ... bits

- Anything with two separate states can store 1 bit

- In a chip: electric charge = 0/1

- In a hard drive: spots of North/South magnetism = 0/1

- A bit is too small to be much use

- Group 8 bits together to make 1 byte

Byte

Bits, however, are not solitary creatures. They come together in groups of eight to form what is known as a byte. Because each of its eight bits can be 0 or 1, a byte can take 256 distinct values, which makes it a more meaningful unit of data. It's the byte that allows computers to represent characters, numbers, and even more complex forms of data.

- One byte = collection of 8 bits

- e.g. 0 1 0 1 1 0 1 0

- In ASCII, one byte can store one character, e.g. 'A' or 'x' or '$'
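To make the example bit pattern above concrete, here is a minimal Python sketch that reads the eight bits 0 1 0 1 1 0 1 0 as a number and then as an ASCII character. The helper name `bits_to_byte` is hypothetical and chosen only for illustration.

```python
def bits_to_byte(bits: str) -> int:
    """Interpret eight '0'/'1' characters (spaces allowed) as an unsigned integer."""
    cleaned = bits.replace(" ", "")
    if len(cleaned) != 8 or set(cleaned) - {"0", "1"}:
        raise ValueError("expected exactly eight binary digits")
    # int(..., 2) parses the string as a base-2 number.
    return int(cleaned, 2)


value = bits_to_byte("0 1 0 1 1 0 1 0")
print(value)       # 90
print(chr(value))  # Z  (90 is the ASCII code for 'Z')
print(2 ** 8)      # 256 distinct values fit in one byte
```

The same eight bits can be read as the number 90 or as the letter 'Z'. Which reading applies depends on how the program interprets the byte.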

Information Encoding

Now that we understand the building blocks – bits and bytes – let's delve into how they encode the information that drives our digital interactions. Imagine a simple scenario: sending the letter "A" to a friend via text. Behind the scenes, this seemingly straightforward action involves a fascinating dance of bits and bytes.

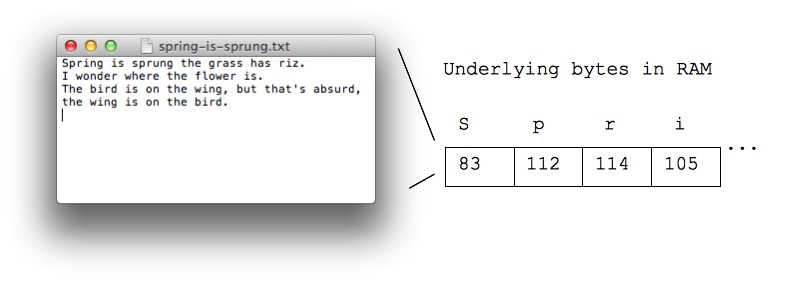

The letter "A" is represented using a standard called ASCII (American Standard Code for Information Interchange).

In ASCII, the letter "A" is assigned a unique numerical value, which is then encoded using a series of bits. These bits are transmitted to your friend's device, where they are decoded back into the familiar "A" on their screen.
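The round trip described above can be sketched in a few lines of Python. This is only an illustration of the idea, not how any particular messaging app works. The function names `char_to_bits` and `bits_to_char` are assumptions made for this example.

```python
def char_to_bits(ch: str) -> str:
    """Encode one ASCII character as a string of eight bits."""
    code = ord(ch)  # numerical value assigned to the character
    if len(ch) != 1 or code > 127:
        raise ValueError("expected a single ASCII character")
    # "08b" formats the number in binary, zero-padded to 8 digits.
    return format(code, "08b")


def bits_to_char(bits: str) -> str:
    """Decode a string of eight bits back into its character."""
    return chr(int(bits, 2))


sent = char_to_bits("A")
print(ord("A"))            # 65
print(sent)                # 01000001
print(bits_to_char(sent))  # A
```

The sender turns "A" into the number 65 and then into the bits 01000001. The receiver reverses both steps to recover the letter.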

This encoding process applies to everything from text messages to high-definition images and complex software applications. Every piece of data is represented as bits, which are grouped into bytes for storage and transmission. When it's time to retrieve the data, the process is reversed, and those bits and bytes are decoded to recreate the original information.

Facts

- In ASCII, each letter is stored in one byte.

- 100 typed letters take up 100 bytes.

- When you send, say, a text message, it is the numbers representing the letters that are sent.

- Text is quite compact, using few bytes, compared to images, etc.
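These facts are easy to check with a short Python sketch. It assumes plain ASCII text (one byte per letter) and treats 1 KB as 1024 bytes, which is one common convention; some tools use 1000 instead. The function name `text_size` is hypothetical.

```python
def text_size(text: str) -> dict:
    """Report the size of ASCII text in bits, bytes, and kilobytes."""
    data = text.encode("ascii")  # raises UnicodeEncodeError for non-ASCII text
    n_bytes = len(data)
    return {
        "bits": n_bytes * 8,
        "bytes": n_bytes,
        "kilobytes": n_bytes / 1024,  # assumption: 1 KB = 1024 bytes
    }


message = "a" * 100  # 100 typed letters
print(text_size(message)["bytes"])  # 100
print(text_size(message)["bits"])   # 800

# The numbers that are actually sent for a short message:
print(list("Hi".encode("ascii")))   # [72, 105]
```

Text that contains characters outside ASCII, such as accented letters or emoji, generally needs more than one byte per character in encodings like UTF-8.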

The Power of Bits and Bytes

The significance of bits and bytes cannot be overstated. They are the fundamental currency of the digital world, facilitating the exchange of information across the vast expanse of the internet, powering applications, and making communication between devices possible. Without these basic units of data, the technological landscape we've come to rely on would simply cease to function.

Consider the colossal datasets streaming through cloud services, the intricate web of interconnected devices in the Internet of Things (IoT), and the immersive experiences of virtual reality – all of these innovations are underpinned by the principles of bits and bytes. Whether you're sending an email, streaming a movie, or conducting a video call, these tiny entities are working tirelessly behind the scenes to make it all happen.

Conclusion

Understanding the role of bits and bytes in encoding and transmitting information gives us a deeper appreciation for the intricacies of the digital world. As technology continues to evolve, the concepts of bits and bytes remain constant, reminding us of the elegant simplicity that underlies the complexity of modern computing.

Guest